Vision-based Navigation and Manipulation



Concept

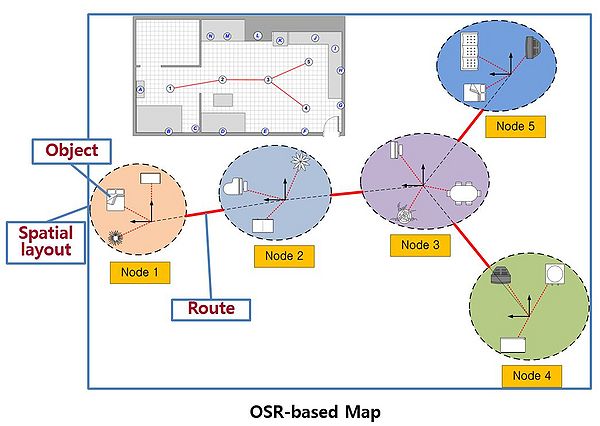

- As a map representation, we propose a hybrid map that combines object, spatial-layout, and route information.

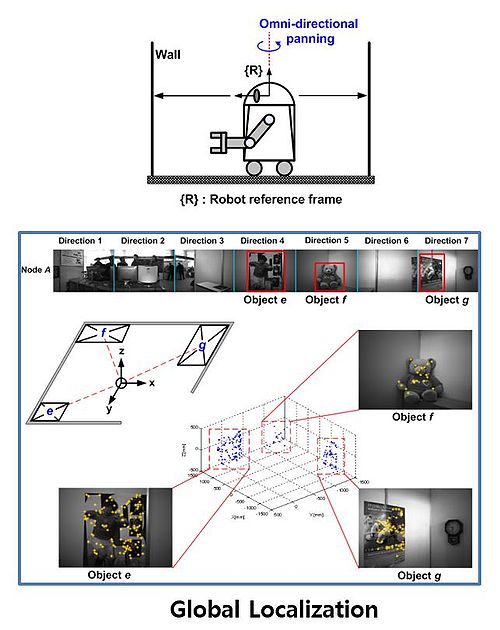

- Global localization is based on object recognition and the associated pose relationships, while local localization uses 2D contour matching on 2D laser scan data.

- Our map representation is as follows:

- The object-based global localization is as follows:
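The idea of a hybrid map and object-based global localization can be illustrated with a minimal Python sketch. This is not the implementation used in this work. It assumes a planar (2D) world, a single recognized object whose pose relative to the robot is known, and a map that stores each object's world pose. The names `Pose2D`, `HybridMap`, and `localize_from_object` are hypothetical and chosen only for illustration.

```python
import math
from dataclasses import dataclass, field


def wrap(angle):
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


@dataclass(frozen=True)
class Pose2D:
    """Planar pose: position (x, y) and heading theta in radians."""
    x: float
    y: float
    theta: float

    def compose(self, other):
        """Return self * other (express `other`, given in self's frame, in self's parent frame)."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(self.x + c * other.x - s * other.y,
                      self.y + s * other.x + c * other.y,
                      wrap(self.theta + other.theta))

    def inverse(self):
        """Return the inverse rigid transform."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(-(c * self.x + s * self.y),
                      s * self.x - c * self.y,
                      wrap(-self.theta))


@dataclass
class HybridMap:
    """Illustrative container for object, spatial-layout, and route information."""
    objects: dict = field(default_factory=dict)  # object name -> world pose (Pose2D)
    layout: dict = field(default_factory=dict)   # place name -> names of objects in it
    routes: dict = field(default_factory=dict)   # place name -> adjacent place names


def localize_from_object(hybrid_map, name, object_in_robot):
    """Estimate the robot's world pose from one recognized object.

    world_T_robot = world_T_object * inverse(robot_T_object)
    """
    world_T_object = hybrid_map.objects[name]
    return world_T_object.compose(object_in_robot.inverse())


# Hypothetical example: a door stored in the map, observed 3 m straight ahead.
hybrid_map = HybridMap(
    objects={"door": Pose2D(1.0, 5.0, 0.0)},
    layout={"corridor": ["door"]},
    routes={"corridor": ["lab"], "lab": ["corridor"]},
)
observation = Pose2D(3.0, 0.0, -math.pi / 2)  # door pose in the robot frame
robot_pose = localize_from_object(hybrid_map, "door", observation)
```

In this sketch a single object observation fixes the global pose. A local method such as 2D contour matching on laser scans could then refine that estimate.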

Related papers

Unknown Objects Grasping

Concept

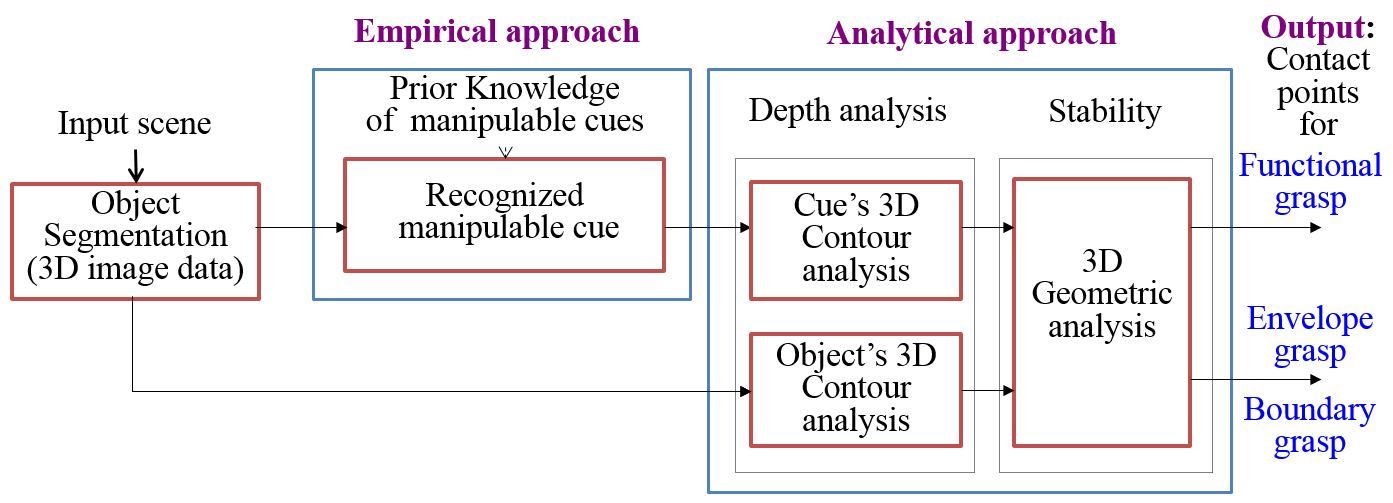

- Using stereo vision (a passive 3D sensor) and a jaw-type hand, we studied a method for grasping arbitrary unknown objects.

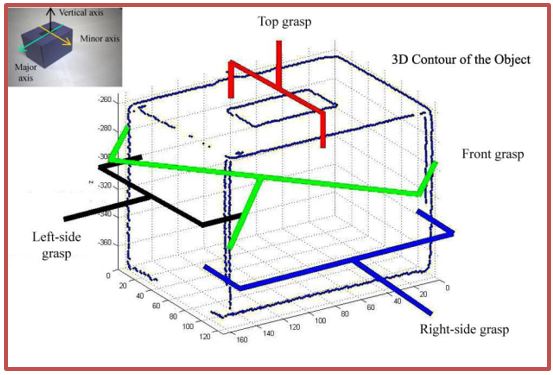

- Given perception from only a single-shot 3D image, three grasp directions are suggested: lift-up, side, and frontal. An affordance-based grasp, the handle grasp, is also proposed.

- Our grasp directions are as follows:

- The overall grasping process is as follows:
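As a rough illustration of choosing among the lift-up, side, frontal, and handle grasps, the Python sketch below filters grasp candidates by a simple geometric test. The feasibility criterion is an assumption made for this example, since the text above does not state one: a direction is kept if the smaller of the two object extents perpendicular to the approach axis fits within the jaw opening. The names `ObjectExtent` and `candidate_grasps` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectExtent:
    """Axis-aligned extents of the perceived object, in metres (assumed inputs)."""
    width: float   # left-right extent as seen from the robot
    depth: float   # front-back extent
    height: float  # vertical extent


def candidate_grasps(extent, jaw_opening, has_handle=False):
    """Return grasp types that pass the assumed jaw-opening test.

    For each approach direction, the jaws must close across one of the two
    extents perpendicular to the approach axis, so the smaller one must fit.
    """
    perpendicular = {
        "lift-up": (extent.width, extent.depth),   # approach from above
        "side": (extent.depth, extent.height),     # approach along the width axis
        "frontal": (extent.width, extent.height),  # approach along the depth axis
    }
    grasps = []
    if has_handle:
        # Affordance-based grasp: listed first when a handle was detected.
        grasps.append("handle")
    for name, dims in perpendicular.items():
        if min(dims) <= jaw_opening:
            grasps.append(name)
    return grasps


# Hypothetical object: 6 cm wide, 20 cm deep, 10 cm tall; jaws open to 8 cm.
box = ObjectExtent(width=0.06, depth=0.20, height=0.10)
grasps = candidate_grasps(box, jaw_opening=0.08)
grasps_with_handle = candidate_grasps(box, jaw_opening=0.08, has_handle=True)
```

A real system would also need to account for reachability, collisions, and the partial view of a single 3D image, none of which this sketch models.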