Difference between revisions of "3W for HRI"

From Robot Intelligence

| Line 1: | Line 1: | ||

| − | == | + | ==Project Outline== |

| + | *Research Period : 2012.6 ~ 2017.5 (5 years, 5st Phase) | ||

| + | *Funded by the Ministry of Trade, Industry and Energy (Grant No: 10041629) | ||

| + | |||

| + | ==Introduction and Research Targets== | ||

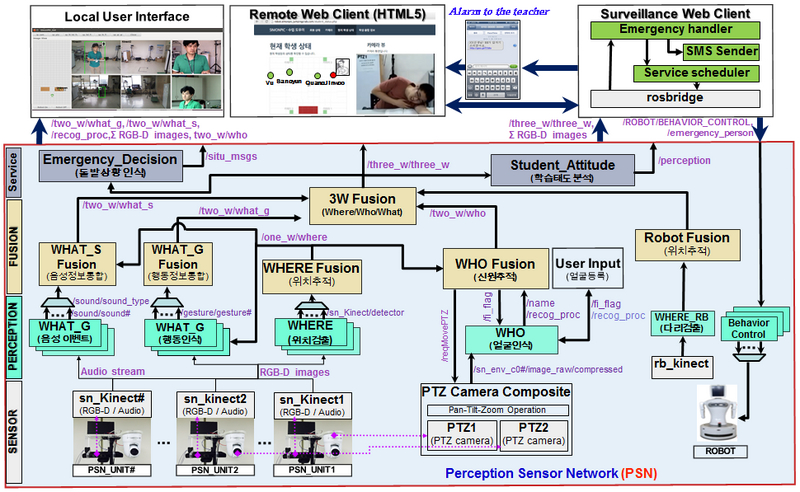

| + | 1. This project is for the purpose of the implementation of technologies for identification, behavior and location of human based sensor network fusion program. | ||

| + | - Reliably detect the occurrence of human-caused emergency situations via audio-visual perception modules | ||

| + | - Make an urgent SMS transmission to let someone know that an emergency event occurs, and relay spot information to them | ||

| + | - Perform an immediate reaction for the happening by using robot navigation and interaction technologies on behlaf of the remote user | ||

| + | 2. In this project we develop a robot-assisted management system for promptly coping with abnormal events in classroom environments. | ||

| + | |||

| + | ::[[File:Simonpic_overview.png|800px|left]] <br/> <br/> <br/> <br/> <br/> <br/><br/> <br/> <br/> <br/> <br/> <br/> | ||

| + | <br/> <br/> <br/> <br/> <br/> <br/><br/> <br/> <br/> <br/> <br/> | ||

| + | |||

| + | ==Developing Core Technologies== | ||

| + | <br><b>DETECTION</b> | ||

| + | *<b>WHERE</b>: Human detection and localization | ||

| + | *<b>WHO</b>: Face recognition and ID tracking | ||

| + | *<b>WHAT</b>: Recognition of individual and group behavior | ||

| + | *3W data association on the perception sensor network | ||

| + | |||

| + | <br><b>AUTOMATIC SURVEILLANCE</b> | ||

| + | *Automatic message transmission for human-caused emergencies | ||

| + | *Analysis of student attitude | ||

| + | *Remote monitoring via Web technologies and stream server | ||

| + | |||

| + | <br><b>ROBOT REACTION</b> | ||

| + | *Human-friendly robot behavior | ||

| + | *Gaze control and robot navigation | ||

| + | *Human following with recovery mechanism | ||

Revision as of 18:42, 19 December 2016

Project Outline

- Research Period: 2012.6 ~ 2017.5 (5 years, 5th Phase)

- Funded by the Ministry of Trade, Industry and Energy (Grant No: 10041629)

Introduction and Research Targets

1. This project aims to implement technologies for recognizing human identity, behavior, and location, based on a sensor network fusion program.

- Reliably detect human-caused emergency situations via audio-visual perception modules

- Send an urgent SMS to notify someone that an emergency has occurred, and relay information from the scene to them

- React immediately to the event using robot navigation and interaction technologies on behalf of the remote user

2. This project develops a robot-assisted management system for responding promptly to abnormal events in classroom environments.

Developing Core Technologies

DETECTION

- WHERE: Human detection and localization

- WHO: Face recognition and ID tracking

- WHAT: Recognition of individual and group behavior

- 3W data association on the perception sensor network

AUTOMATIC SURVEILLANCE

- Automatic message transmission for human-caused emergencies

- Analysis of student attitude

- Remote monitoring via web technologies and a streaming server

ROBOT REACTION

- Human-friendly robot behavior

- Gaze control and robot navigation

- Human following with recovery mechanism